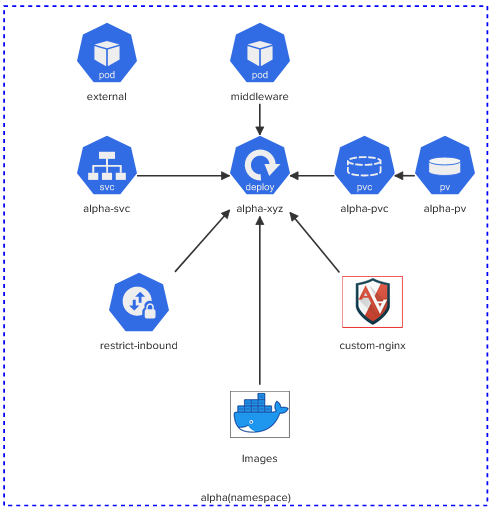

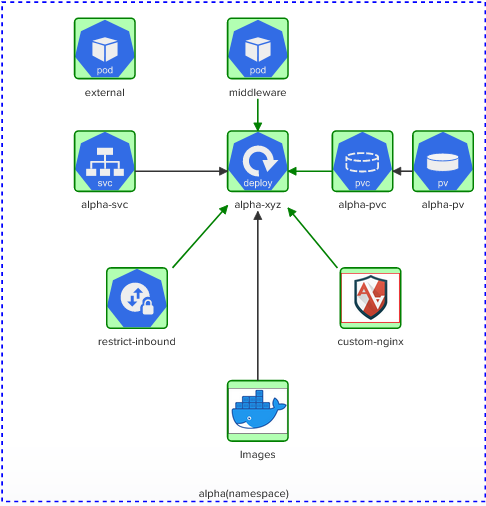

In this post we'll work through a broken Kubernetes setup from a nice KodeKloud challenge, where:

- The persistent volume claim can’t be bound to the persistent volume

- Load the ‘AppArmor’ profile called ‘custom-nginx’ and ensure it is enforced.

- The deployment ‘alpha-xyz’ uses an insecure image and needs to mount the ‘data-volume’.

- ‘alpha-svc’ should be exposed on ‘port: 80’ and ‘targetPort: 80’ as ClusterIP

- Create a NetworkPolicy called ‘restrict-inbound’ in the ‘alpha’ namespace. Policy Type = ‘Ingress’. Inbound access only allowed from the pod called ‘middleware’ with label ‘app=middleware’. Inbound access only allowed to TCP port 80 on pods matching the policy

- ‘external’ pod should NOT be able to connect to ‘alpha-svc’ on port 80

1 Persistent Volume Claim

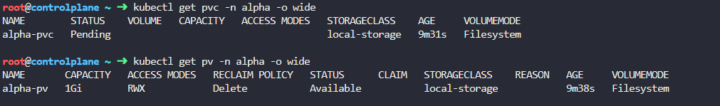

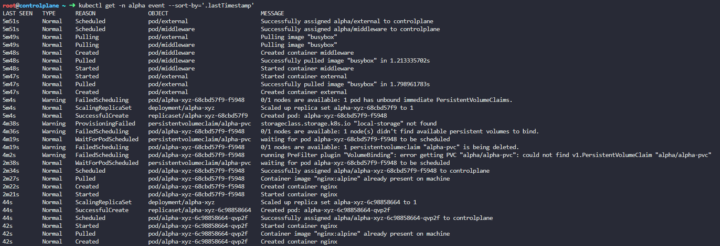

First of all, we notice that the PVC exists but is stuck in Pending, so let’s look into it.

The first difference we notice is the access mode: ReadWriteOnce on the PVC but ReadWriteMany on the PV.

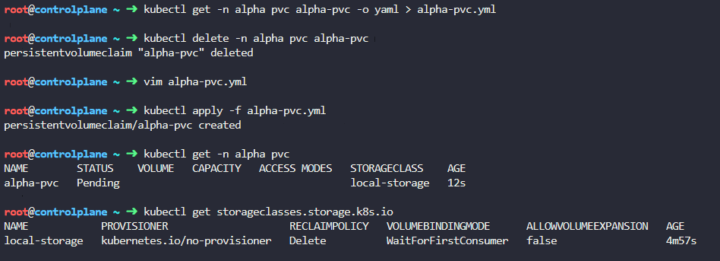

We also want to check whether the storage class the claim refers to is present on the cluster.

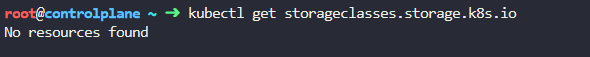
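The same checks can be run from the command line. This is a minimal sketch, assuming the claim is ‘alpha-pvc’ in the ‘alpha’ namespace; the helper name inspect_storage is made up for this post.

```shell
# Hypothetical helper: print what we need to compare the claim with the volumes.
inspect_storage() {
  # Access modes and storage class requested by the claim
  kubectl get pvc alpha-pvc -n alpha \
    -o custom-columns=NAME:.metadata.name,STATUS:.status.phase,MODES:.spec.accessModes,CLASS:.spec.storageClassName
  # Access modes and storage class offered by each PersistentVolume (cluster-scoped)
  kubectl get pv \
    -o custom-columns=NAME:.metadata.name,STATUS:.status.phase,MODES:.spec.accessModes,CLASS:.spec.storageClassName
  # Storage classes that actually exist on the cluster
  kubectl get storageclass
}
```

Calling inspect_storage prints the claim, the volumes and the storage classes one after the other, so mismatched access modes or a missing class are easy to spot.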

Let’s fix that by creating the ‘local-storage’ StorageClass:

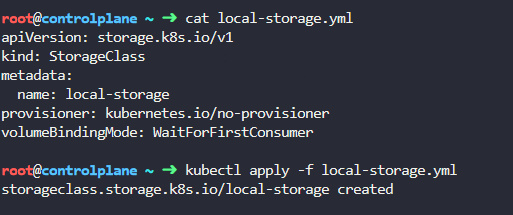
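Since the screenshot is not copy-pasteable, here is a sketch of what creating that resource could look like. It follows the local-volume example in the Kubernetes storage class docs; the helper name create_local_storage_class is hypothetical, and the exact manifest used in the challenge may differ.

```shell
# Hypothetical helper: create the StorageClass that the PV and PVC refer to.
create_local_storage_class() {
  kubectl apply -f - <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
# Local volumes are not dynamically provisioned
provisioner: kubernetes.io/no-provisioner
# Delay binding until a pod actually uses the claim
volumeBindingMode: WaitForFirstConsumer
EOF
}
```

With ‘WaitForFirstConsumer’ the claim stays Pending until a pod that uses it is scheduled, which matches what we see later in this post.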

Get the PVC YAML, delete the extra lines and modify access mode:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      finalizers:
      - kubernetes.io/pvc-protection
      name: alpha-pvc
      namespace: alpha
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
      storageClassName: local-storage
      volumeMode: Filesystem

Now the PVC is “waiting for first consumer”, so let’s move on to fixing the deployment 🙂
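Getting the claim into that state means replacing the existing object, because the access modes of an existing PVC can't be edited in place. A minimal sketch, assuming the corrected manifest was saved as alpha-pvc.yaml (the file name and the helper name recreate_pvc are assumptions):

```shell
# Hypothetical helper: swap the pending claim for the corrected one.
# $1 = path to the fixed manifest (defaults to alpha-pvc.yaml, an assumed name)
recreate_pvc() {
  # --force deletes the existing object and re-creates it from the file
  kubectl replace --force -f "${1:-alpha-pvc.yaml}"
  # Show the claim again to check its status
  kubectl get pvc alpha-pvc -n alpha
}
```

Deleting the claim and applying the file again by hand gives the same result; ‘replace --force’ just does both in one go.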

https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistentvolumeclaims

https://kubernetes.io/docs/concepts/storage/storage-classes/#local

2 AppArmor

Before fixing the deployment we need to load the AppArmor profile, otherwise the pod won’t start.

To do this we place our profile in /etc/apparmor.d and load it in enforce mode.

- https://ubuntu.com/tutorials/beginning-apparmor-profile-development#1-overview

- https://kubernetes.io/docs/tutorials/security/apparmor/
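The two steps above (place the profile, load it) could be sketched like this. Where the challenge ships the profile file is not stated here, so the path is passed as an argument; the helper name load_apparmor_profile is hypothetical. The commands have to run on the node where the pod will be scheduled.

```shell
# Hypothetical helper: install a profile file and load it into the kernel.
# $1 = path to the profile file
load_apparmor_profile() {
  profile_src="$1"
  profile_dst="/etc/apparmor.d/$(basename "$profile_src")"
  # Copy the profile next to the system profiles, unless it is already there
  [ "$profile_src" = "$profile_dst" ] || sudo cp "$profile_src" "$profile_dst"
  # Parse and load the profile; -r replaces a copy that is already loaded.
  # Profiles load in enforce mode unless they declare the complain flag.
  sudo apparmor_parser -r "$profile_dst"
  # List the loaded profiles and confirm custom-nginx is among them
  sudo aa-status | grep custom-nginx
}
```

In the full ‘aa-status’ output the profile should appear under the profiles in enforce mode.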

3 DEPLOYMENT

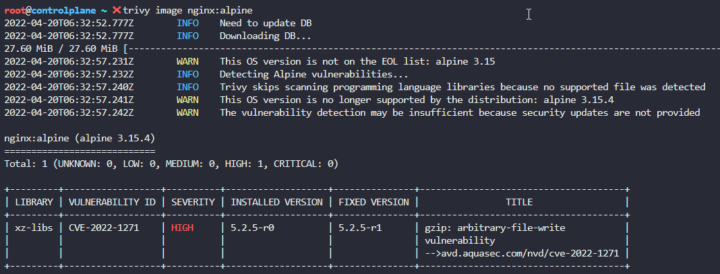

For this exercise the permitted images are: ‘nginx:alpine’, ‘bitnami/nginx’, ‘nginx:1.13’, ‘nginx:1.17’, ‘nginx:1.16’ and ‘nginx:1.14’.

We use ‘trivy’ to find the image with the fewest ‘CRITICAL’ vulnerabilities.

Let’s take a look at what we have now:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: alpha-xyz
      name: alpha-xyz
      namespace: alpha
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: alpha-xyz
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: alpha-xyz
        spec:
          containers:
          - image: ?
            name: nginx

Scanning all the permitted images shows that the most secure one is the alpine version.
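A small loop makes the comparison quicker than scanning the images one by one. This is only a sketch: the helper name scan_images is made up, and it assumes Trivy's default table output, which prints a ‘Total:’ summary line for each scanned target.

```shell
# Hypothetical helper: print the CRITICAL totals Trivy reports for each image.
scan_images() {
  for img in "$@"; do
    echo "== $img"
    # --severity limits the report to CRITICAL findings, --quiet hides progress output;
    # grep keeps only the summary lines
    trivy image --severity CRITICAL --quiet "$img" | grep 'Total:'
  done
}
```

For the images allowed in this exercise the call would be: scan_images nginx:alpine bitnami/nginx nginx:1.13 nginx:1.17 nginx:1.16 nginx:1.14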

So we can now fix the deployment with three changes:

- use the ‘nginx:alpine’ image

- add ‘alpha-pvc’ as a volume named ‘data-volume’

- add the annotation for the AppArmor profile loaded before

The fixed manifest becomes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: alpha-xyz
      name: alpha-xyz
      namespace: alpha
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: alpha-xyz
      strategy: {}
      template:
        metadata:
          labels:
            app: alpha-xyz
          annotations:
            container.apparmor.security.beta.kubernetes.io/nginx: localhost/custom-nginx
        spec:
          containers:
          - image: nginx:alpine
            name: nginx
            volumeMounts:
            - name: data-volume
              mountPath: /usr/share/nginx/html
          volumes:
          - name: data-volume
            persistentVolumeClaim:
              claimName: alpha-pvc
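Applying the manifest and checking the result could look like the sketch below. It assumes the fixed Deployment was saved as alpha-xyz.yaml; both the file name and the helper name deploy_alpha are assumptions.

```shell
# Hypothetical helper: apply the fixed Deployment and wait for it to become ready.
# $1 = path to the manifest (defaults to alpha-xyz.yaml, an assumed name)
deploy_alpha() {
  kubectl apply -f "${1:-alpha-xyz.yaml}"
  # Blocks until the rollout finishes or the timeout expires
  kubectl rollout status deployment/alpha-xyz -n alpha --timeout=120s
  # With a consumer scheduled, the claim should now move from Pending to Bound
  kubectl get pvc alpha-pvc -n alpha
}
```

If the rollout hangs, ‘kubectl describe pod’ on the new pod usually tells whether the AppArmor profile or the volume is the culprit.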

4 SERVICE

We can do this quickly with a single command:

    kubectl expose deployment alpha-xyz --type=ClusterIP --name=alpha-svc --namespace=alpha --port=80 --target-port=80

5 NETWORK POLICY

Here we want a policy that:

- applies to pods matching the ‘app: alpha-xyz’ label

- affects only incoming (ingress) traffic

- allows that traffic only from pods labelled ‘app=middleware’

- allows it only on TCP port 80

This translates to:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: restrict-inbound
      namespace: alpha
    spec:
      podSelector:
        matchLabels:
          app: alpha-xyz
      policyTypes:
      - Ingress
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: middleware
        ports:
        - protocol: TCP
          port: 80

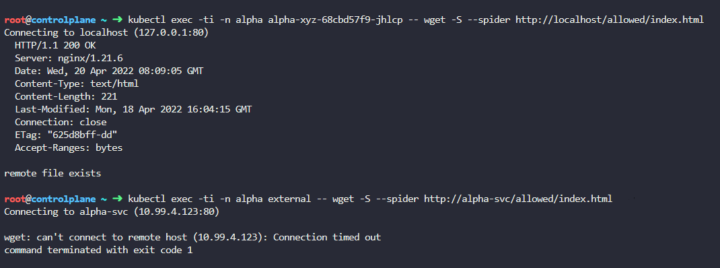

We can now test that the route between the ‘external’ pod and ‘alpha-xyz’ is closed.
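One way to run that test is to call the service from inside each pod. This is a sketch under a few assumptions that the challenge may not satisfy: both pods run in the ‘alpha’ namespace and their images ship curl. The helper name can_reach_alpha_svc is made up.

```shell
# Hypothetical helper: try to reach alpha-svc on port 80 from a given pod.
# Assumes the pod lives in the alpha namespace and has curl installed.
# $1 = pod name
can_reach_alpha_svc() {
  # --max-time stops curl after 3 seconds, so a dropped connection fails fast
  if kubectl exec -n alpha "$1" -- curl -s -o /dev/null --max-time 3 http://alpha-svc:80; then
    echo "$1: connected"
  else
    echo "$1: blocked"
  fi
}
```

With the policy in place we expect can_reach_alpha_svc middleware to report a connection and can_reach_alpha_svc external to report a block.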

Done!

REFERENCES:

- https://kodekloud.com/courses/cks-challenges/

- https://unix.stackexchange.com/a/320496/130710

- https://github.com/tuxerrante/kubernetes-utils#init-commands-to-memorize
