My goal is to call a service on an AKS cluster (aks1/EU) from a pod on a second AKS cluster (aks2/US).

These clusters will be in different regions and should communicate over a private network.

For the cluster networking I’m using the Azure CNI plugin.

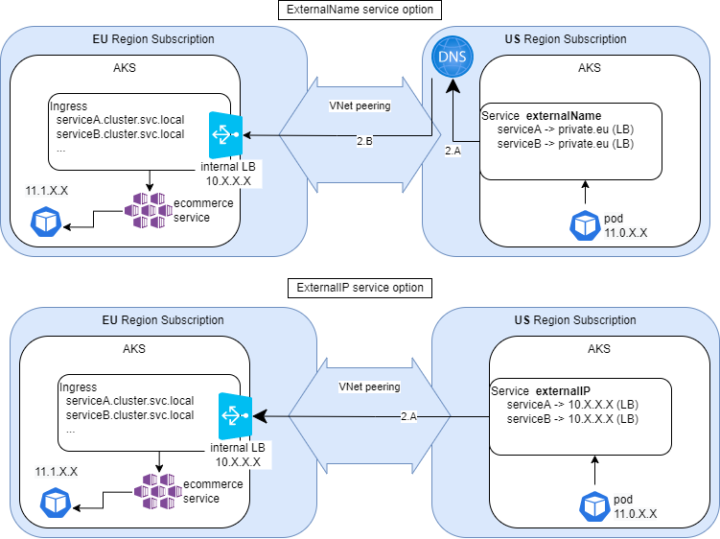

The diagram above shows the two candidate target architectures: an ExternalName or an ExternalIP service on the US AKS cluster pointing to a private IP of the EU ingress controller.

After some reading and a few videos, the best option seemed to be an ExternalName service on AKS2 resolving to a name in a custom private DNS zone (ecommerce.private.eu.dev), with the two VNets peered beforehand.

Address space for the AKS services:

- dev-vnet: 10.0.0.0/14

Clusters:

- dev-test1-aks (v1.22.4, 1 node) in dev-test1-vnet: 11.0.0.0/16
- dev-test2-aks (v1.22.4, 1 node) in dev-test2-vnet: 11.1.0.0/16

After some trials I got connectivity between the pod networks, but I was never able to reach the service network from the other cluster.

- I don’t have any active firewall

- I’ve peered all three networks: dev-test1-vnet, dev-test2-vnet, dev-vnet (services CIDR)

- I’ve created a private DNS zone, private.eu.dev, containing the “ecommerce” A record (10.0.129.155) that the ExternalName service should resolve to
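The peering and DNS steps in the bullets above can be scripted with the Azure CLI. This is a minimal sketch that assumes every VNet and the zone live in a single resource group and that VNets are referenced by name; `peer_vnets` and `setup_private_dns` are hypothetical helpers, not part of the CLI. Keep in mind that a successful lookup only proves the record exists. With the A record pointing at a ClusterIP such as 10.0.129.155, the nslookup test further down succeeds while the connection still times out.

```bash
#!/usr/bin/env bash
# Sketch of the network setup from the bullets above, using the Azure CLI.
# The resource group and VNet names passed in are hypothetical placeholders.

# Peer two VNets. Azure peering is per direction, so a peering is created on each side.
peer_vnets() {
  local rg="$1" a="$2" b="$3"
  az network vnet peering create --resource-group "$rg" \
    --name "${a}-to-${b}" --vnet-name "$a" --remote-vnet "$b"
  az network vnet peering create --resource-group "$rg" \
    --name "${b}-to-${a}" --vnet-name "$b" --remote-vnet "$a"
}

# Create a private DNS zone, link it to every given VNet and add one A record.
# Usage: setup_private_dns RG ZONE RECORD IP VNET...
setup_private_dns() {
  local rg="$1" zone="$2" record="$3" ip="$4" vnet
  shift 4
  az network private-dns zone create --resource-group "$rg" --name "$zone"
  for vnet in "$@"; do
    # Names in the zone only resolve from VNets that are linked to it.
    az network private-dns link vnet create --resource-group "$rg" \
      --zone-name "$zone" --name "${vnet}-link" \
      --virtual-network "$vnet" --registration-enabled false
  done
  az network private-dns record-set a add-record --resource-group "$rg" \
    --zone-name "$zone" --record-set-name "$record" --ipv4-address "$ip"
}
```

With a hypothetical resource group `dev-rg`, the setup described here would be `peer_vnets dev-rg dev-test1-vnet dev-test2-vnet` (and likewise for dev-vnet), followed by `setup_private_dns dev-rg private.eu.dev ecommerce 10.0.129.155 dev-test1-vnet dev-test2-vnet`.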

dev-test1-aks (EU cluster):

```bash
kubectl create deployment eu-ecommerce --image=k8s.gcr.io/echoserver:1.4 --port=8080 --replicas=1
kubectl expose deployment eu-ecommerce --type=ClusterIP --port=8080 --name=eu-ecommerce
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/cloud/deploy.yaml
kubectl create ingress eu-ecommerce --class=nginx --rule=eu.ecommerce/*=eu-ecommerce:8080
```

This is the ingress rule:

```yaml
# ❯ kubectl --context=dev-test1-aks get ingress eu-ecommerce-2 -o yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: eu-ecommerce-2
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: lb.private.eu.dev
    http:
      paths:
      - backend:
          service:
            name: eu-ecommerce
            port:
              number: 8080
        path: /ecommerce
        pathType: Prefix
status:
  loadBalancer:
    ingress:
    - ip: 20.xxxxx
```

This is one of the ExternalName services I’ve tried on dev-test2-aks:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: eu-services
  namespace: default
spec:
  type: ExternalName
  externalName: ecommerce.private.eu.dev
  ports:
  - port: 8080
    protocol: TCP
```

These are some of my tests:

```bash
# --- Test externalName
kubectl --context=dev-test2-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget -qO- http://eu-services:8080
: '
wget: cant connect to remote host (10.0.129.155): Connection timed out
'

# --- Test connectivity AKS1 -> eu-ecommerce service
kubectl --context=dev-test1-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget -qO- http://eu-ecommerce:8080
kubectl --context=dev-test1-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget -qO- http://10.0.129.155:8080
kubectl --context=dev-test1-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget -qO- http://eu-ecommerce.default.svc.cluster.local:8080
kubectl --context=dev-test1-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget -qO- http://ecommerce.private.eu.dev:8080
# OK client_address=11.0.0.11

# --- Test connectivity AKS2 -> eu-ecommerce POD
kubectl --context=dev-test2-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget -qO- http://11.0.0.103:8080
#> OK

# --- Test connectivity - LB private IP
kubectl --context=dev-test1-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget --no-cache -qO- http://lb.private.eu.dev/ecommerce
#> OK
kubectl --context=dev-test2-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- wget --no-cache -qO- http://lb.private.eu.dev/ecommerce
#> KO wget: can't connect to remote host (10.0.11.164): Connection timed out
#>> This is the ClusterIP! -> Think twice!

# --- Traceroute gives no information
kubectl --context=dev-test2-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- traceroute -n -m4 ecommerce.private.eu.dev
: '
* * *
3 * * *
4 * * *
'

# --- test2-aks can see the private DNS zone and resolve the hostname
kubectl --context=dev-test2-aks run -it --rm --restart=Never busybox --image=gcr.io/google-containers/busybox -- nslookup ecommerce.private.eu.dev
: '
Server: 10.0.0.10
Address 1: 10.0.0.10 kube-dns.kube-system.svc.cluster.local
Name: ecommerce.private.eu.dev
Address 1: 10.0.129.155
'
```

I’ve also created inbound and outbound network policies for the AKS networks:

- on dev-aks (10.0/16) allow all incoming from 11.1/16 and 11.0/16

- on dev-test2-aks allow any outbound

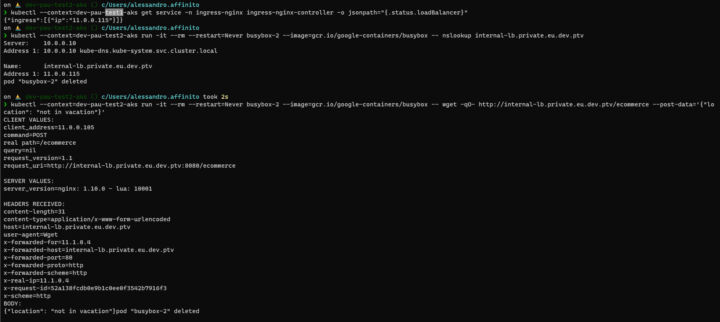
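Why no firewall, peering or policy rule helps here: both addresses that time out in the tests above (10.0.129.155 and 10.0.11.164) are ClusterIPs, and nslookup reports kube-dns at 10.0.0.10. Assuming both clusters were created with the AKS defaults (`--service-cidr 10.0.0.0/16`, `--dns-service-ip 10.0.0.10`), a ClusterIP is a virtual address that kube-proxy programs on each cluster's own nodes. It is not bound to any NIC, so over the peering the packets end up in dev-vnet, where nothing answers. From dev-test2-aks the same range refers to that cluster's own services anyway. The AKS networking docs also ask that the service CIDR not be used by any network connected to the cluster VNet, and the peered dev-vnet (10.0.0.0/14) contains it. The pure-bash sketch below checks which range an address belongs to and whether two ranges overlap; the helper names are hypothetical and not part of any Azure or Kubernetes tool.

```bash
#!/usr/bin/env bash
# Sketch: IPv4/CIDR helpers in pure bash (no external tools needed).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Network mask for a prefix length 0..32 (bash integers are 64-bit, so trim to 32).
prefix_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Succeeds if IP $1 lies inside CIDR $2, e.g. ip_in_cidr 10.0.129.155 10.0.0.0/16
ip_in_cidr() {
  local ip net mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  mask=$(prefix_mask "${2#*/}")
  (( (ip & mask) == (net & mask) ))
}

# Succeeds if CIDRs $1 and $2 share at least one address.
cidrs_overlap() {
  local n1 l1 n2 l2 mask
  n1=$(ip_to_int "${1%/*}"); l1=${1#*/}
  n2=$(ip_to_int "${2%/*}"); l2=${2#*/}
  # Two CIDR blocks overlap iff they agree on the bits of the shorter prefix.
  mask=$(prefix_mask $(( l1 < l2 ? l1 : l2 )))
  (( (n1 & mask) == (n2 & mask) ))
}
```

For example, `ip_in_cidr 10.0.129.155 10.0.0.0/16` and `cidrs_overlap 10.0.0.0/14 10.0.0.0/16` both succeed, while `cidrs_overlap 11.0.0.0/16 11.1.0.0/16` fails. Choosing a distinct `--service-cidr` per cluster at `az aks create` time avoids the collision, but ClusterIPs still stay cluster-internal, which is why the fix has to expose a routable address.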

SOLUTION: Turn the ingress controller's load balancer into an internal LB, so that its external IP is exposed on the private subnet:

```bash
kubectl --context=dev-test1-aks patch service -n ingress-nginx ingress-nginx-controller --patch '{"metadata": {"annotations": {"service.beta.kubernetes.io/azure-load-balancer-internal": "true"}}}'
```
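After the patch, the Service's EXTERNAL-IP should change from the public 20.x address to a private one taken from the cluster subnet, and that new address is what the lb.private.eu.dev A record needs to point to. A minimal sketch that polls for it, assuming the context, namespace and Service names from the patch command; `lb_ip` and `wait_for_internal_ip` are hypothetical helpers, and matching on a string prefix such as `11.0.` (dev-test1-vnet) is a simplification of a proper subnet check.

```bash
#!/usr/bin/env bash
# Sketch: wait for the patched ingress-nginx Service to get its internal LB IP.

# Print the first load balancer ingress IP of a Service (empty while pending).
lb_ip() {
  local ctx="$1" ns="$2" svc="$3"
  kubectl --context="$ctx" -n "$ns" get service "$svc" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll every 10s until the IP starts with the given subnet prefix, then print it.
# Returns 1 if that does not happen within the given number of tries (default 30).
wait_for_internal_ip() {
  local ctx="$1" ns="$2" svc="$3" prefix="$4" tries="${5:-30}" ip i
  for (( i = 0; i < tries; i++ )); do
    ip="$(lb_ip "$ctx" "$ns" "$svc")"
    if [[ -n "$ip" && "$ip" == "$prefix"* ]]; then
      echo "$ip"
      return 0
    fi
    sleep 10
  done
  return 1
}
```

Usage: `ip=$(wait_for_internal_ip dev-test1-aks ingress-nginx ingress-nginx-controller 11.0.)`, then point the `lb` record in private.eu.dev at `$ip` (for example with `az network private-dns record-set a add-record`, removing the old ClusterIP entry).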

This article is also on Medium 🙂

Docs I read:

- https://docs.microsoft.com/en-us/azure/aks/private-clusters#virtual-network-peering

- https://kubernetes.io/docs/concepts/services-networking/service/#externalname

- https://docs.microsoft.com/en-us/azure/dns/private-dns-getstarted-portal#create-a-private-dns-zone

- https://docs.microsoft.com/en-us/azure/virtual-network/virtual-network-peering-overview

- Complete Overview of Azure Virtual Network Peering